As data center networks move toward 400G and 800G speeds, choosing the right pluggable connector becomes a foundational design decision. QSFP connectors (most notably QSFP-DD) and OSFP are the two leading form factors for these next-generation links, but they are optimized for different priorities, from port density and backward compatibility to power handling and thermal performance.

Understanding how they compare in real-world deployments helps network architects select the form factor that best fits their scaling strategy and operational constraints.

| Category | QSFP Connectors (QSFP-DD) | OSFP |
|---|---|---|
| Max speed | 400G / 800G | 400G / 800G |
| Typical power budget (per module) | ~12W | 15W–16W+ |
| Backward compatibility with QSFP+/QSFP28 | Yes | No |
| Port density | High | Medium |
| Typical use | Ethernet data centers | AI / HPC |
| Ecosystem maturity | Very mature | Emerging |

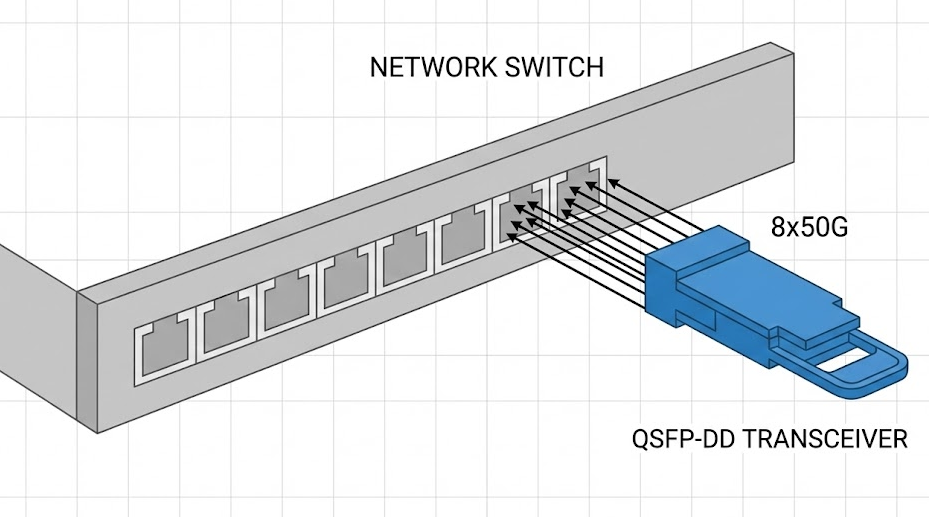

QSFP (Quad Small Form-factor Pluggable) connectors are compact, hot-swappable interfaces that let data center switches accept high-speed optical transceivers. They’ve evolved alongside Ethernet, spanning everything from 40G and 100G to today’s 400G and 800G deployments. The most widely adopted modern variant, QSFP-DD (Double Density), doubles the electrical interface from four to eight high-speed lanes, making it possible to deliver more bandwidth while staying within a familiar QSFP-style footprint.
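The speed steps in the QSFP family follow from simple lane arithmetic: aggregate bandwidth is the number of electrical lanes multiplied by the per-lane rate. The Python sketch below assumes nominal per-lane rates of 10G, 25G, 50G and 100G; actual line rates run slightly higher to carry encoding and FEC overhead, and the entries are common examples rather than a complete list of QSFP variants.

```python
# Sketch: aggregate module bandwidth = electrical lanes x nominal per-lane rate.
# Entries are common examples (nominal rates), not an exhaustive list of variants.
FORM_FACTORS = {
    "QSFP+": (4, 10),            # 4 lanes x 10G  -> 40G
    "QSFP28": (4, 25),           # 4 lanes x 25G  -> 100G
    "QSFP-DD (400G)": (8, 50),   # 8 lanes x 50G PAM4  -> 400G
    "QSFP-DD (800G)": (8, 100),  # 8 lanes x 100G PAM4 -> 800G
}


def aggregate_gbps(lanes: int, lane_rate_gbps: int) -> int:
    """Return the nominal aggregate data rate in Gb/s."""
    return lanes * lane_rate_gbps


if __name__ == "__main__":
    for name, (lanes, rate) in FORM_FACTORS.items():
        print(f"{name}: {lanes} x {rate}G = {aggregate_gbps(lanes, rate)}G")
```

Doubling the lane count from four to eight is what lets QSFP-DD reach 400G with 50G lanes, the same per-lane rate a four-lane module would need to reach only 200G.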

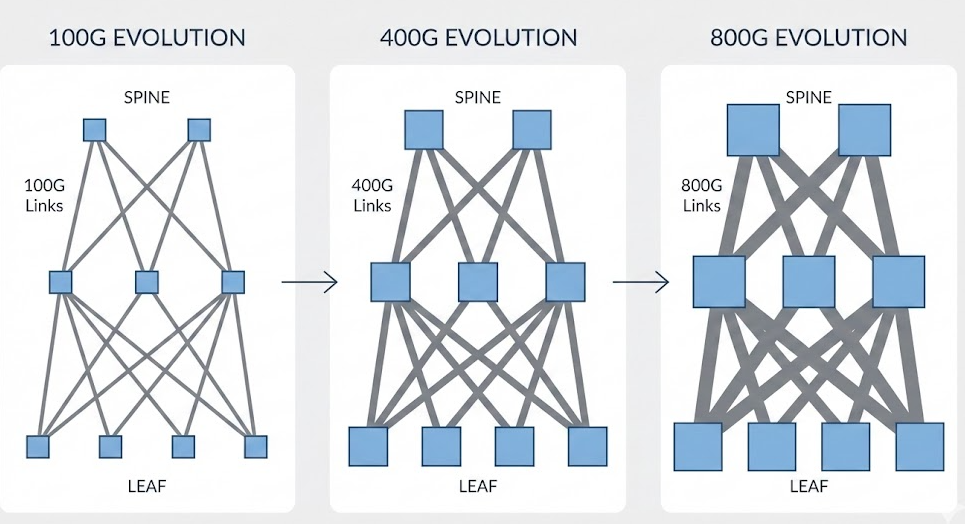

In day-to-day rollout and operations, QSFP form factors tend to win out in large environments because they make network growth easier to manage. Many data centers can scale from 100G to 400G, and progressively toward 800G, without a full switch overhaul or a major cabling rebuild, which helps keep both costs and downtime under control. That practicality is why hyperscale operators often treat the QSFP-DD cage as a long-term port standard, combining a clear upgrade path with strong density and broad ecosystem support.

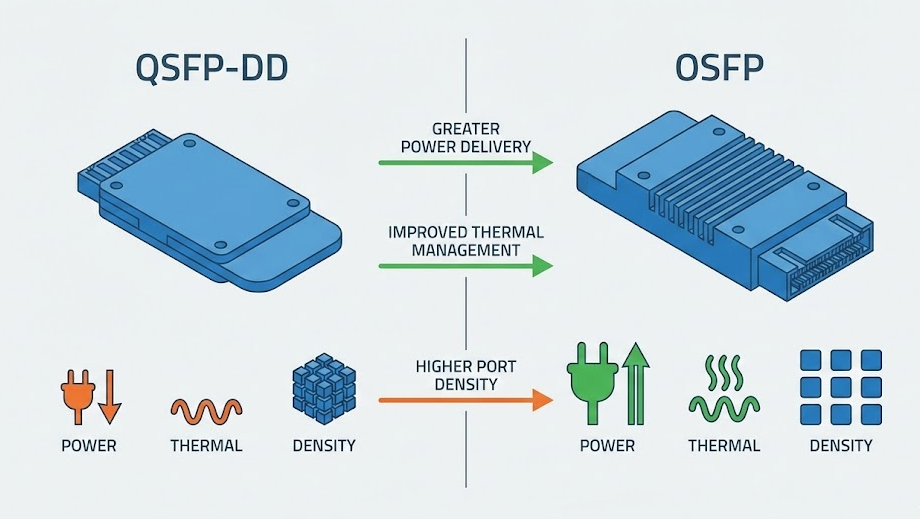

OSFP (Octal Small Form Factor Pluggable) is a newer pluggable interface aimed at next-generation 400G and 800G optics, especially those that run at higher power. It’s slightly larger than QSFP-DD, and that extra space isn’t just cosmetic—it gives hardware designers more breathing room for airflow, heat spreading, and overall thermal management.

In practice, OSFP shows up most often in environments where power and heat are the real constraints, not just port count—think AI/ML compute clusters, HPC networks, and other setups pushing sustained throughput. With more thermal margin to work with, teams can run performance-oriented optics more comfortably over long periods, particularly in dense racks where keeping temperatures stable is part of keeping links stable.

OSFP has a slightly larger body, which gives designers extra space for heat-handling features and more robust thermal layouts. QSFP cages, especially the QSFP-DD cage, keep a tighter footprint, making it easier to pack more ports onto the front panel and improve overall density.

To see how these physical differences usually translate into real deployment and hardware design choices, the table below summarizes the typical impact on layout and port planning:

| Feature | QSFP-DD Connectors | OSFP Connectors |
|---|---|---|
| Width & depth | Compact | Larger |
| Heatsink | External / limited | Built-in |
| Port density | Higher | Lower |

In top-of-rack (ToR) switches, the limiting factor is often the physical real estate on the front panel. Operators are trying to maximize the number of high-speed links per rack unit so the leaf layer can grow in a clean, repeatable way as new racks are added. Because QSFP connectors—particularly QSFP-DD—are relatively compact, switch designs can usually accommodate more ports in the same faceplate area, boosting rack-level bandwidth and keeping port layouts consistent across rows.
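To make the density argument concrete, front-panel bandwidth is simply port count times per-port speed. The sketch below is illustrative only: the 36-port and 32-port 1RU layouts are assumptions chosen to show the effect of a narrower cage, not specifications of any particular switch, and the helper names are hypothetical.

```python
# Sketch: front-panel bandwidth of a switch = ports x per-port speed.
# Port counts used below are illustrative assumptions, not product specs.


def faceplate_tbps(ports: int, port_speed_gbps: int) -> float:
    """Total front-panel bandwidth in Tb/s."""
    return ports * port_speed_gbps / 1000


def ports_needed(target_tbps: float, port_speed_gbps: int) -> int:
    """Smallest number of ports that reaches target_tbps (ceiling division)."""
    target_gbps = round(target_tbps * 1000)  # avoid float drift, e.g. 12.8 * 1000
    return -(-target_gbps // port_speed_gbps)


if __name__ == "__main__":
    # Hypothetical 1RU layouts: a denser QSFP-DD faceplate vs. a wider OSFP one.
    layouts = (("QSFP-DD (assumed 36 ports)", 36), ("OSFP (assumed 32 ports)", 32))
    for label, ports in layouts:
        print(f"{label}: {faceplate_tbps(ports, 400):.1f} Tb/s at 400G")
    print("Ports needed for 12.8 Tb/s at 400G:", ports_needed(12.8, 400))
```

Under these assumed layouts, the four extra ports add 1.6 Tb/s per 1RU switch at 400G, a gap that compounds as the same leaf design is repeated across rows.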

OSFP can be a smart choice when modules run hotter and thermal headroom is the top priority, but the larger form factor typically reduces how many ports fit on a panel. In most ToR deployments—where standardized cabling, predictable expansion, and density matter day after day—QSFP connectors tend to be the more balanced, operationally friendly option for data center Ethernet.

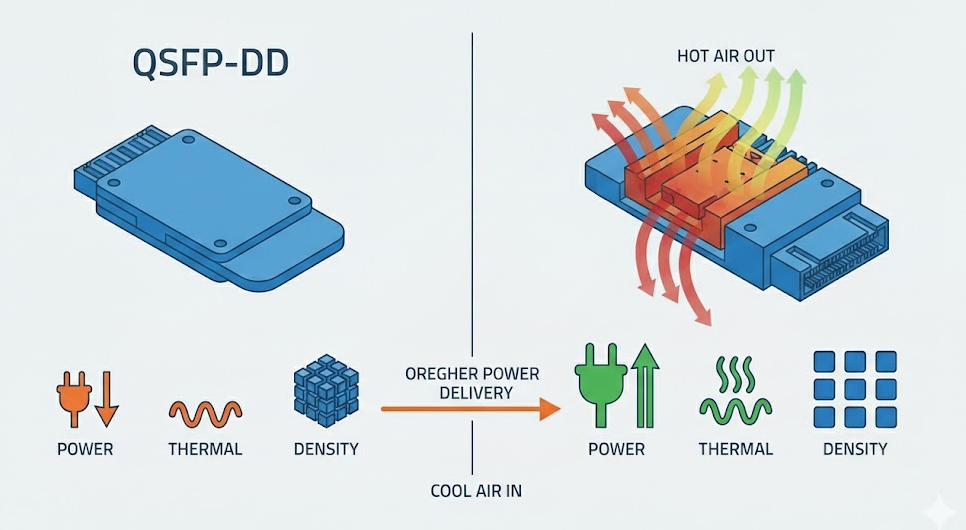

OSFP is designed for higher module power and sustained heat, making it a strong fit for optics that run close to thermal limits. QSFP cages—especially QSFP-DD—typically operate within a tighter power range, which helps switches keep high port density without overburdening cooling.

The table below summarizes the practical differences in power budget, thermal handling, and common deployment scenarios:

| Aspect | QSFP-DD (QSFP Connectors) | OSFP |
|---|---|---|
| Typical power budget | ~12W | 15W–16W+ |
| Thermal approach | Chassis airflow / external heatsink | More built-in thermal capacity |
| Best fit | Density-focused data centers | Thermally constrained AI/HPC |
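A quick power check shows why the budget difference matters. This Python sketch treats the article’s typical figures (~12W for QSFP-DD, and 15W–16W+ for OSFP, taken here as 16W) as fixed per-port limits. That is a simplification: real limits depend on the module’s power class and the platform’s thermal design, and the 14W module below is a hypothetical example.

```python
# Sketch: per-port power budget check and total optics power per switch.
# Budgets are the article's typical figures; real limits are platform-specific.
TYPICAL_BUDGET_W = {"QSFP-DD": 12.0, "OSFP": 16.0}


def optics_power_w(ports: int, module_w: float) -> float:
    """Total power drawn by the pluggable optics on one switch."""
    return ports * module_w


def fits_budget(form_factor: str, module_w: float) -> bool:
    """True if a module's rated power is within the form factor's typical budget."""
    return module_w <= TYPICAL_BUDGET_W[form_factor]


if __name__ == "__main__":
    module_w = 14.0  # hypothetical draw of a higher-power 800G module
    for ff in TYPICAL_BUDGET_W:
        print(f"{ff} fits {module_w} W module: {fits_budget(ff, module_w)}")
    print(f"32 ports x {module_w} W = {optics_power_w(32, module_w)} W")
```

The per-port check captures the module-level limit, while the total shows the heat the chassis cooling has to remove once every port is populated.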

In AI clusters, accelerators can run hot for long periods, and optics often end up in airflow that’s already “pre-warmed” by GPUs. In those racks, OSFP’s extra thermal headroom is easier to work with—modules tend to stay within spec more consistently, and you’re less likely to fight fan curves, port placement, or intermittent link issues under sustained load.

Most Ethernet switch platforms, however, are designed around the typical power envelope of QSFP connectors, with cooling design, port spacing, and validation tuned for that range. So unless you’re frequently operating near thermal limits, QSFP-based builds usually slot in more smoothly, especially in standard leaf/spine designs where density, noise, and power all need to stay balanced.

When planning an upgrade, QSFP connectors are often the easier path. Since QSFP-DD remains part of the wider QSFP family, many switch platforms can continue to use existing modules such as QSFP28 (100G) and QSFP+ (40G), which helps teams move in stages instead of forcing a big-bang replacement.
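The reuse argument can be expressed as a simple compatibility lookup. The sketch below encodes only the general form-factor rule described here: a QSFP-DD cage accepts QSFP-DD, QSFP28 and QSFP+ modules, while an OSFP cage accepts OSFP modules unless an adapter is used. Mechanical fit does not guarantee that a given switch supports the lower speed, so the table is an assumption to check against each platform’s supported-optics list.

```python
# Sketch: which module types fit which cage without an adapter.
# Encodes the general QSFP-family rule only; platform support lists may differ.
CAGE_ACCEPTS = {
    "QSFP-DD": {"QSFP-DD", "QSFP28", "QSFP+"},
    "OSFP": {"OSFP"},
}


def fits_without_adapter(cage: str, module: str) -> bool:
    """True if the module form factor plugs into the cage directly."""
    return module in CAGE_ACCEPTS.get(cage, set())


def reusable_modules(cage: str, inventory: list[str]) -> list[str]:
    """Return the spare modules that could be reused in the given cage type."""
    return [m for m in inventory if fits_without_adapter(cage, m)]


if __name__ == "__main__":
    spares = ["QSFP28", "QSFP28", "QSFP+", "OSFP"]
    print("Reusable in QSFP-DD ports:", reusable_modules("QSFP-DD", spares))
    print("Reusable in OSFP ports:", reusable_modules("OSFP", spares))
```

Running the same spares inventory against both cage types is a quick way to see how much existing 40G/100G optics an upgrade could keep in service.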

OSFP, on the other hand, isn’t designed with that kind of form-factor continuity in mind. Older QSFP modules typically won’t fit, and using adapters introduces extra components that need to be sourced, validated, and supported—adding friction in day-to-day operations.

In production networks, that difference shows up quickly in both cost and risk. Being able to reuse 40G/100G optics while upgrading port speeds can reduce forklift refreshes, simplify spares strategy, and shorten maintenance windows—key reasons QSFP connectors continue to be the default choice in many enterprise and cloud data centers.

OSFP and QSFP cages use different host connectors and pin layouts, so they aren’t directly interchangeable. While both carry eight electrical lanes for 400G/800G links, the mechanical and connector designs differ, meaning modules aren’t plug-and-play across the two. The table below highlights the key differences in lanes and interface design side by side:

| Feature | QSFP-DD | OSFP |
|---|---|---|
| Electrical lanes | 8 × 50G (400G) / 8 × 100G (800G) | 8 × 50G (400G) / 8 × 100G (800G) |
| Pin layout | QSFP family standard | Unique OSFP layout |
| Adapter needed for legacy QSFP modules | No | Yes |

QSFP connectors are the go-to choice for most Ethernet deployments, while OSFP is a more specialized option—best suited to situations where higher-power optics and long-running workloads make thermal headroom the deciding factor, even if that means sacrificing some port density.
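The trade-offs in that summary can be sketched as a rule-of-thumb selection helper. Everything here is a hypothetical illustration: the `PortRequirements` fields, the 12W threshold (the article’s typical QSFP-DD figure), and the choice to flag conflicting goals for manual review are assumptions, not an established sizing method.

```python
from dataclasses import dataclass


# Hypothetical requirements record; field names are illustrative only.
@dataclass
class PortRequirements:
    module_power_w: float         # expected per-module power draw in watts
    reuse_legacy_qsfp: bool       # must existing 40G/100G QSFP optics be reused?
    thermal_headroom_first: bool  # is sustained thermal margin the top priority?


# Assumed threshold: the article's typical QSFP-DD power budget.
QSFP_DD_TYPICAL_W = 12.0


def suggest_form_factor(req: PortRequirements) -> str:
    """Return "QSFP-DD", "OSFP", or "review" when the goals conflict."""
    needs_headroom = req.module_power_w > QSFP_DD_TYPICAL_W or req.thermal_headroom_first
    if needs_headroom and req.reuse_legacy_qsfp:
        # Thermal headroom points to OSFP, while legacy reuse points to QSFP-DD.
        return "review"
    return "OSFP" if needs_headroom else "QSFP-DD"


if __name__ == "__main__":
    leaf = PortRequirements(12.0, reuse_legacy_qsfp=True, thermal_headroom_first=False)
    ai_fabric = PortRequirements(16.0, reuse_legacy_qsfp=False, thermal_headroom_first=True)
    print("Ethernet leaf:", suggest_form_factor(leaf))
    print("AI fabric:", suggest_form_factor(ai_fabric))
```

Returning "review" rather than forcing a winner reflects that thermal headroom and legacy reuse pull in opposite directions, which is a design decision rather than a lookup.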

Across cloud, enterprise, and colocation networks, QSFP connectors are widely adopted for 400G/800G switch ports because they align with how data centers typically grow: gradually, in controlled phases. Many teams can move from 100G to 400G (and plan toward 800G) without disruptive platform changes, keeping cabling practices and day-to-day operations consistent while still maintaining strong port density on ToR and spine switches. That balance of density, a reasonable power envelope, and a mature ecosystem is a major reason QSFP-DD remains common in Tier-1 architectures.

Along the same lines, GLGNET provides a broad portfolio of QSFP connectors and optical modules for 100G/400G/800G networks, with a focus on interoperability and steady performance in production, helping upgrades stay predictable rather than adding extra operational complexity.

OSFP appears more often in performance-first environments—AI/ML training clusters, HPC fabrics, and other bandwidth-heavy systems—where optics may draw more power and thermal limits quickly become a hard constraint. It’s typically used with higher-power modules and short-reach, ultra-high bandwidth links, including AI networking fabrics and InfiniBand-style deployments, where maintaining stable links under sustained load can be just as important as maximizing the number of ports on the front panel.

Conclusion

QSFP connectors (especially QSFP-DD) are the practical default for Ethernet data centers, combining high density, backward compatibility, and a mature ecosystem for smoother upgrades. OSFP is better suited to AI/ML and HPC environments where higher-power optics and extra thermal headroom matter most. If you’re planning a 100G/400G/800G rollout, explore GLGNET’s QSFP connectors and optical modules to support a reliable, interoperable deployment.

Read more:

https://www.glgnet.biz/articledetail/top-5-sfp-cage-manufacturers-in-2026.html

https://www.glgnet.biz/articledetail/what-are-sfp-ports-used-for.html